It’s June 2023 and Victor has been spending most of his days at what he calls his “second home,” on East 126th Street, between Park and Lexington avenues, in East Harlem. A dozen or so men congregate outside, some sifting through belongings in a plastic bag or texting on their phones, others sitting on folding chairs or stools, playing cards, smoking, talking or just watching passersby. As an unhoused person in New York City, Victor says OnPoint NYC, a nonprofit organization that opened two overdose prevention centers in November 2021, provides him a “sense of community” he can’t get elsewhere.

Inside, Victor, who provided only his first name when I talked to him last June, will go through reception and into a back room. He’ll fill out a form that provides the information OnPoint needs to make sure he doesn’t die. The form asks for his name and time of arrival, what drug he’ll be consuming and how he’ll consume it. From a list that includes meth, marijuana, cocaine, crack, benzos, fentanyl, speedball and many more, he checks heroin, which he’ll inject. At the bottom, the form asks: “If you weren’t using here now, where would you have gone to use?” Options include the street, sidewalk, between cars, under a bridge, a park, a public restroom, a subway station, your own place (Victor doesn’t have one), a friend’s place or “other.” And it asks if he’d be using alone.

“Yes” is a common answer to that last question. That’s why OnPoint NYC exists. Its two locations, the one in East Harlem and one in the Washington Heights neighborhood, are the only officially sanctioned overdose prevention centers, or OPCs, operating in the United States. People bring drugs they’ve obtained elsewhere and use them under the supervision of trained staff who can provide sterile supplies for drug use and can respond to overdoses.

The approach remains highly controversial in the United States, with critics pointing out that the sites are sanctioning, if not encouraging, illegal drug use. What’s more, critics are concerned that OPCs increase crime, local drug use and public nuisance in the area. This opposition is just one challenge, alongside many legal, social, financial and logistical barriers, facing an OPC trying to open and stay open.

“I understand what it sounds like, right? You’re gonna allow people to use drugs on your site,” says Sam Rivera, executive director of OnPoint NYC. “When people question whether it’s good or it gets people well, showing them is what gives them the answer. The answer is yes, of course it does.”

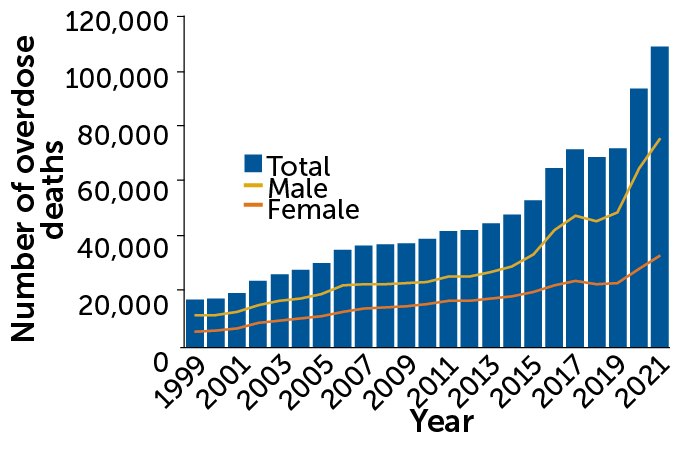

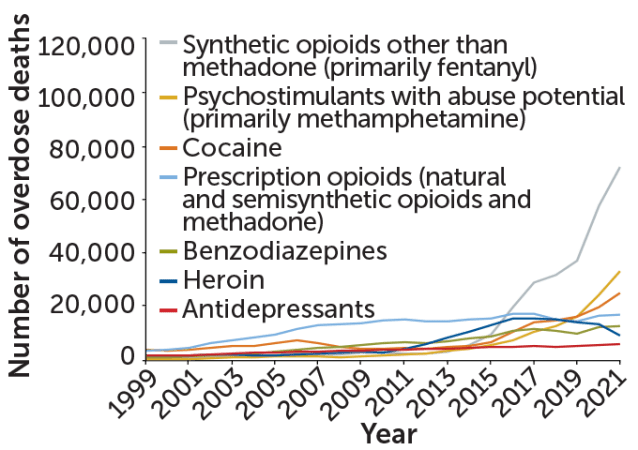

The United States had more than 106,000 drug overdose deaths in 2021, the most recent year for which complete data are available. That’s more per capita than other high-income countries with available data. The vast majority of those deaths involve opioids, including prescription opioid medications and heroin, but predominantly synthetic opioids such as fentanyl. Annual deaths from opioid overdoses have more than doubled since 2015.

“We obviously need to figure out what alternative interventions we can provide to people to prevent them from dying,” says Nora Volkow, director of the National Institute on Drug Abuse in Bethesda, Md. “It’s crucial.”

After Congress directed that institute, along with the Centers for Disease Control and Prevention, to produce a report in 2021 on the potential public health impact of OPCs, the agencies’ findings noted “the consistent observation that initial objections to OPCs from local stakeholders tend to disappear following their implementation.”

OPCs have existed around the world for decades. Research has shown that they meet many of their primary goals: reducing overdose deaths, health care costs, the use of emergency services, emergency room visits, hospital stays, public drug use, infectious disease from nonsterile needles, and drug-related litter, such as used syringes. The sites also let people test their drugs to find out what they actually include. Many sites provide additional services aimed at improving overall health — infectious disease screening or testing, wound care, substance use treatment referrals and other programs that meet health care or social needs.

There’s been growing interest in the United States as well. A 2017 study estimated that an OPC in Baltimore that would cost $1.8 million a year to run would save the city $7.8 million a year in health care costs, but Maryland’s legislature has yet to authorize one. A center operated in San Francisco for nearly 11 months in 2022 before shutting down due to political backlash. Last year, the state of Minnesota and the city council of Somerville, Mass., each set aside money for OPCs. Additional sites have been proposed or are under consideration in Seattle, Denver, Philadelphia and elsewhere.

OnPoint has become a model for proposed sites across the United States. Researchers are analyzing its data, alongside data from other countries, to assess how OPCs might fare in a country without universal access to health care, with limited social safety nets, and with more drug use and overdose deaths.

In April 2023, the National Institutes of Health awarded the first portion of a grant, expected to total more than $5 million over four years, to researchers who will assess the effectiveness and costs of OPCs based on data from OnPoint and another site approved by the Rhode Island legislature and slated to open this year. That data could help shape how future centers operate and what services they offer, as well as how the nation approaches drug use more generally.

For Victor, the benefits of OnPoint go far beyond the immediate services provided. “It’s them treating you and looking at you as a person, because most people, most places you go, once you tell them you’re doing drugs, they have an idea of who you are already, a stigma,” he says.

Fostering community can be key to recovery, Volkow says: “That building of trust and a sense of acceptance and belonging is really the first step that can make a person want to go to treatment.”

For Rivera, the experience at the center is, “for lack of a better term, a lovefest.” Though he says his staff never initiates conversations about detox, treatment, rehab or recovery, they nevertheless have those conversations every day with people who come to the center.

What is harm reduction?

The first OPC opened in Bern, Switzerland, in 1986, and today there are more than 140 legally sanctioned OPCs in more than a dozen countries, including Australia, Canada, Mexico and across Europe. Since Canada opened North America’s first OPC, Insite, in Vancouver in 2003, it has added dozens more sites around the country, plus more “pop-up” mobile spots run out of tents or campers. OPCs are also known by other names, such as supervised injection sites, drug consumption facilities and safe consumption sites. But regardless of what you call them, the philosophy is the same: harm reduction.

Harm reduction “focuses on improving the health and reducing the negative health outcomes for individuals,” says Elizabeth Samuels, an emergency and addiction medicine physician at UCLA. “At its most basic level, it’s treating people with respect, dignity and autonomy,” and giving them information and tools “to keep themselves and their loved ones safe.” Laws requiring seat belt use in cars are harm reduction tactics. So are adding filters on cigarettes and distributing condoms to prevent pregnancy and the spread of sexually transmitted infections.

Samuels says there’s plenty of evidence that harm reduction strategies work to reduce drug-related problems. Yet in the United States, such interventions — providing safe, sterile drug consumption equipment, for example — are often stigmatized or criminalized. The current approach of punishing people who use drugs is a carry-over from the failed “war on drugs,” she says, “but it remains pervasive in the American psyche and in some portions of the general population.” We know addiction is a disease, not a moral issue, she says. “Pushing people underground and making them feel shame,” she adds, increases risky drug-related behaviors, such as sharing needles, which can transmit bloodborne diseases like HIV and hepatitis C.

Barriers to OPCs in the United States are financial (for example, who is going to fund them?), logistical (where will they be located?) and social (will communities accept them?). But the biggest hurdle has been legal. In a section often called the “crack house statute,” the Anti-Drug Abuse Act of 1986 makes it a felony to “knowingly open, lease, rent, use or maintain any place, whether permanently or temporarily, for the purpose of manufacturing, distributing or using any controlled substance.” Crack, a form of cocaine that is nearly always smoked, has come with harsher penalties than other forms of the drug. Cocaine in crack form has historically been perceived as more prevalent in Black communities, which has contributed to racial injustices.

A nonprofit called Safehouse tested this law in 2019, attempting to open an OPC in Philadelphia. The effort kicked off a court battle, and in 2021, the Third Circuit Court of Appeals ruled that the proposed OPC would violate the statute. Safehouse continues to explore its legal options.

Meanwhile, harm reduction advocates in New York were growing desperate as people died from overdoses — more than 2,000 in New York City during 2020 alone. New York Harm Reduction Educators in East Harlem and the Washington Heights Corner Project, both harm reduction social services organizations, had been running syringe exchange programs and offering related services in the city since the early 1990s. Representatives from these groups had done the logistical groundwork to open an OPC and had the support of city hall. A 2018 feasibility study conducted by the city’s health department and funded by the New York City Council suggested that opening four OPCs in New York City could prevent up to 130 deaths a year and save $7 million annually in public health care costs.

In the early days of 2021, the New York groups had a choice to make. The Safehouse ruling, from a different federal appellate court than the one overseeing New York, showed the potential legal risks of opening an OPC. But after President Joe Biden took office and listed “enhancing evidence-based harm reduction efforts” as a drug policy priority, the groups decided to move forward, merged into OnPoint NYC and opened two new sites.

“Our people are dying, and we know we have the medicine, the apparatus, everything we need to keep people alive, and they don’t have to die,” says Rivera, who was named as one of Time magazine’s most influential people of 2023.

While most of OnPoint’s extra services receive funding through city and federal grants, the overdose prevention and drug supplies services are funded through private dollars from a mixture of individuals, nonprofit organizations and foundations.

So far, OnPoint hasn’t been challenged in court, but the Anti-Drug Abuse Act statute remains a major deterrent to building more centers, Samuels says. Lack of public funding and community resistance are also barriers. The vast number of people dying has changed the climate somewhat, she says. More people are seeking all the “evidence-based tools in our toolbox to prevent any further loss of life.”

What does existing OPC research show?

Along the top of the back wall in the safe consumption area at OnPoint in East Harlem, where Victor uses his heroin, blue painted letters announce: “THIS SITE SAVES LIVES.” And below it in Spanish, “ESTE SITIO SALVA VIDAS.” Below are two defibrillators, each with plushies on top, including a Pokémon Psyduck, a gray puppy and even one shaped like a grinning bottle of naloxone, a medication used to reverse opioid overdoses. Two crash carts are ready to go if the staff notice someone slumped over, becoming discolored or otherwise showing potential signs of an overdose, which happens about three to five times a week, says Alsane Mezon, a harm reduction specialist at OnPoint.

Existing research on OPCs, which comes primarily from Insite in Vancouver and the Uniting Medically Supervised Injection Centre in Sydney, suggests the sites do save lives. The first major systematic review, published in 2014 in Drug and Alcohol Dependence, included a study looking at overdose deaths in Vancouver before and after Insite opened in September 2003. Nearly 90 overdose deaths occurred within 500 meters of the site in the period from January 1, 2001, to December 31, 2005, with the fatal overdose rate declining by 35 percent after the opening. That’s compared with a 9 percent reduction over the same time period in the rest of Vancouver.

In a study of the area around the OPC in Sydney, the average monthly number of ambulance calls for opioid-related overdoses in the hours the center was open, which numbered in the hundreds, decreased by 80 percent after its opening. The decrease was more dramatic than what was seen in the rest of the state of New South Wales. None of the studies included in the 2014 review or a more recent one from 2021 documented any death from overdose inside an OPC.

Despite concerns from critics, the reviews also found no increase in crime, drug trafficking or drug use–related public nuisance associated with the OPCs but did document reductions in syringe litter and public drug use. And when it comes to concerns about the sites encouraging drug use, one study from the Vancouver site showed no increase in relapse rates or the overall number of people in the area who used drugs, nor a drop in those starting methadone therapy.

Neither review linked OPCs to a decline in the number of people who injected drugs, but four studies of the Vancouver site and one of the Sydney site suggested an association between visiting OPCs and the likelihood of being referred to addiction treatment or entering a detox program. The 2021 review, published in the American Journal of Preventive Medicine, found frequent use of OPCs increased the rate of accessing treatment by 1.4 to 1.7 times compared with those who used drugs but visited OPCs less frequently or not at all.

A study of the Vancouver site calculated that, after accounting for the cost of running the site, it saved 14 million Canadian dollars in medical costs over a decade, including prevention of 1,191 new HIV and 54 new hepatitis C infections.

Early results from OnPoint appear consistent with previous findings. OnPoint staff and NYC health department employees reported in JAMA Network Open that during OnPoint’s first two months of operation, 613 people used services a total of nearly 6,000 times across both sites, most often for injecting heroin or fentanyl. As seen in Vancouver and Sydney, most visitors were male, and just over a third were unhoused. Center staff responded 125 times to an overdose or near-overdose, with EMS being called five times and three people transported to the emergency department. OnPoint has not recorded any overdose deaths within its walls since it opened.

Three-quarters of people who went to OnPoint said they would have used drugs in a public place. About half of those who went accessed other services there: picking up naloxone to have on hand, going to counseling, receiving medical care or a holistic service such as acupuncture.

Until OnPoint opened, the only peer-reviewed research on OPCs in the United States came from an underground site that opened in 2014 in an unnamed location. In a research letter reported in 2020 in the New England Journal of Medicine, Alex Kral, a behavioral health epidemiologist based in the San Francisco Bay Area with the nonprofit research institute RTI International, and colleagues evaluated the site’s first five years of operation. Out of 10,514 drug injections, 33 opioid-related overdoses occurred on-site and all were reversed with naloxone, with no deaths or transfers to medical facilities.

A separate study by Kral and colleagues, reported in Drug and Alcohol Dependence in 2021, looked at police reports of incidents in the area around the underground site and at two comparison sites without OPCs for five years before and five years after the site’s opening. Drug incidents had been declining around the OPC before opening and continued to decline afterward, suggesting the site had no negative impact. The analysis also found a decrease, rather than an increase, in crime around the OPC site.

Kral, who is not aware of other underground sites in the United States, also studied the OPC that opened in San Francisco in January 2022 and remained open through December of that year. In addition to safe consumption booths, the site offered on-site buprenorphine treatment (to treat opioid use disorder), legal services and even recreational activities such as karaoke competitions. That site reversed 333 opioid overdoses, about one per day it was open. Kral’s team analyzed data on general nuisance and drug-related nuisance within a 500-meter radius around the OPC and around a similar comparison area elsewhere in San Francisco. The analysis suggested, contrary to claims often made by critics, a reduction in nuisance overall, and no increase in drug-related nuisance or homelessness.

Similarly, a separate group of researchers, unaffiliated with OnPoint NYC, recently reported data showing no significant change in violent or property crimes, 911 calls for crime or medical incidents or 311 calls related to drug use in the immediate six-block areas around the OnPoint OPCs.

The small amount of U.S. research has already started to inform policy, Kral says, pointing to the Rhode Island and Minnesota legislatures’ decisions to authorize the opening of OPCs. “We are seeing politicians take what can be a political risk to do this, and I think our data is part of the reason for that,” he says.

What will the new U.S. study test?

Still, the existing research isn’t without limitations. All of the studies are observational, meaning they can show correlations but cannot attribute benefits directly to the OPCs. Many other factors might play a role in local crime rates, medical service utilization, homelessness, infectious disease spread and so on.

OPCs are also far from homogeneous. Though the systematic reviews found that OPCs reduce overdose deaths locally and do not come with increases in local drug use or crime, the 2021 review noted that not much research exists in “resource-poor and politically diverse settings.” Drug use and structural factors, such as law enforcement practices and stigma around drug use, differ across regions. Assessments of the value of the OPC-linked social services, which themselves vary widely, are also limited.

All this leaves a big question open: Can OPCs dramatically reduce harm in the United States, a country with a lot of drug use and among the highest overdose mortality rates in the world?

The new study funded by the National Institutes of Health through the National Institute on Drug Abuse could help answer that question by studying two OPCs over four years. Researchers from New York University will look at both OnPoint sites, and Brown University researchers will focus on the OPC that is set to open in Providence later this year.

That Providence site, in the process of hiring a medical director and finalizing the location, will be funded by $3.25 million allocated from lawsuit settlements between the state and opioid manufacturers, distributors and pharmacies, as well as by money from private foundations and donors, says Annajane Yolken, director of strategy at Project Weber/RENEW, a nonprofit that is helping establish the site. None of the NIH research money will go toward the center’s operating expenses.

The study, part of the NIH harm reduction research network, will look at four outcome types: the impact on people who use the facilities, based on surveys and health records; effects on neighborhoods, including crime, public attitudes and economics; qualitative findings from interviews with OPC staff and clients; and costs, such as the cost of running the site versus the health care savings.

“We are first and foremost scientists — we’re not advocates — so our task is to bring the highest level of scientific rigor to these questions, and we’re hoping that the science can inform policy,” says Magdalena Cerda, the NYU epidemiologist leading the OnPoint portion of the study.

“There are some unique aspects of the U.S. context that justify the need for this kind of study,” says Brandon Marshall, the Brown University epidemiologist leading the Providence portion. Most countries with OPCs have universal health care, and OPCs are funded through that system. The United States doesn’t have that structure, which often means Americans engage with health care differently than people in other countries. “Here, health care provided at an OPC might be the first time someone is experiencing compassionate, low-threshold and free health care,” Marshall says.

Barriers to health care, particularly for chronic pain or mental health conditions, are likely one reason drug use is worse in the United States than in other countries, Kral says. Volkow also points to the “tremendous social disparities” in the United States.

Social inequities and the lack of a social safety net in the United States may influence how big a difference OPCs can make in reducing overdose deaths, Samuels adds. There’s also the punitive treatment of drug use, including its criminalization, and a greater aversion to harm reduction strategies than in other countries with OPCs, Marshall says. He adds that addressing these issues is additionally challenging because of the racist roots of many U.S. drug policies.

What works in Canada and Australia may turn out not to work in the United States, and success may vary across U.S. locations too. One key strength of the two-site study is how much New York City and Providence differ from each other.

“One of the real values of our study is the fact that it leverages two very different contexts, a very urban, dense context of New York City and then the less urban, more suburban Providence,” Cerda says. “Being able to compare those contexts will hopefully give us some more generalizable insights.”

Another big difference will be the services provided. “If you’re going to open an overdose prevention center, then you have to think about all of these wraparound services,” Rivera says. Once people are there, they can have a decent meal, take a nap, meet with a case manager and more.

Cerda refers to OnPoint as the “Cadillac” of OPCs, because it offers so many wraparound services. The plan for the Providence site does not include as many of those services, but the site will be located alongside a treatment program. That could be a benefit for access to treatment, or it might make people more uncomfortable going there.

“We know that the more people use an OPC, the more likely they are to enter into some kind of addiction treatment program broadly,” Marshall says, “but we don’t really know at a more granular level what that looks like.”

A need to pair data with stories

When I met Rivera at his office at OnPoint in June, he was wearing torn gray jeans and a plain gray T-shirt that said in bold white letters: “HEALTH JUSTICE FOR ALL.” He’s a physically large presence, and with his thick, tattooed arms and hands adorned with silver and turquoise jewelry, he might seem intimidating if not for his kind eyes and inviting demeanor.

According to a recent report, in their first year of operation, OnPoint’s two sites were used more than 48,000 times by more than 2,800 people, with OnPoint staff intervening 636 times to prevent overdoses from becoming fatal. (Credit: T. Haelle)

Hanging on the wall behind the desk in his office, cluttered with knickknacks both practical and decorative, is a plastic plaque commemorating the documentary Clean Needles Save Lives. The 1991 film tells the story of the illegal needle exchange program, run by the activist group AIDS Coalition to Unleash Power, or ACT UP, that was established in response to the AIDS epidemic.

Rivera defines “health justice for all” as access to health care without barriers — “an opportunity for someone who’s actively using drugs to use safely and have supplies that are clean and healthy. That’s health care,” he says. “Quite frankly, many drug users don’t have access to health care in the way they need it and deserve it.”

No one expects OPCs to solve the entire drug problem in the United States. For example, sites typically do not allow pregnant individuals or those under 18 to use their services, and women may not feel as welcome at many sites given that the people using OPCs are predominantly male, many with a history of incarceration.

Even for those who do visit the sites, there are barriers. Some people have difficulty injecting themselves, but most sites do not allow someone to help another person inject. Another potential barrier is a lack of smoke rooms — OnPoint has these but many OPCs do not — which is an equity issue because it excludes people who use drugs in this way.

Despite the limitations, Samuels says, OPCs have shown they help people and they save lives. “That’s meaningful in itself,” she says, “and part of a comprehensive, multimodal strategy to address the overdose crisis.”

Jonathan Giftos, an addiction medicine physician and the former assistant commissioner of the NYC Bureau of Alcohol and Drug Use Prevention, Care and Treatment, similarly regards OPCs as one piece of a bigger picture. While the city does not provide funding for OnPoint’s OPC services, the bureau where Giftos worked serves as the city’s liaison with the center, which does receive city funds for some of its extra services.

“No one service solves all problems, and they don’t necessarily replace or supplant other important things, like prevention or treatment or recovery spaces,” says Giftos, now chief of ambulatory care at NYC Health + Hospitals/Woodhull. “As we evaluate their impact, it’s important that we interpret the results through that lens and not think that because they didn’t solve every single problem facing a community, that they’re not effective.”

In his qualitative research from the underground site, Kral regularly heard that people using the site didn’t have friends and felt disconnected from the community. OPCs allow vulnerable people who have been stigmatized by society and burdened by shame to “actually be themselves for a moment” and to develop relationships that encourage them to make decisions “about the kinds of things they want to change in their life,” he says. These centers offer possibilities that can’t be measured in overdose or infectious disease rates.

“The way I have been able to really help people is with empathy, respect and love,” says Mezon, the OnPoint harm reduction specialist. She is a medical assistant, but she says her personal interactions with people at the center are just as important as her clinical tasks. “When I come in, I tell them, ‘First you’re human. We’re going to show you respect,’ and that really changes the narrative.” Mezon says people come to the OPC from as far away as Long Island, Rochester, N.Y., and New Jersey not only because they can get a shower and test their drugs for fentanyl and other substances, but also because they know they will be treated with compassion.

She speaks about her work as a calling. “I have to walk this dark forest every day to find these beautiful flowers that get lost,” she says. “I’m just really grateful to have all walks of life here. This situation does not discriminate, so I’m here to help…. All the things that they’re not getting out there, we’re trying to give them in here.”

Marshall says a lot of work needs to be done to destigmatize addiction and emphasize the humanity of people affected by the overdose crisis. He believes that data and scientific research need to be paired “with the human perspective.”

Edward Krumpotich agrees. A drug policy consultant based in Grand Rapids, Minn., Krumpotich spent a lifetime battling addiction himself and lost his brother to a heroin overdose. He has also helped write half a dozen harm reduction bills in three states, including the 2023 legislation in Minnesota that authorized funding for an OPC.

“Many times, we get stuck in certain statistics. That doesn’t tell the whole tale of how this crisis is happening,” he says. “I think what it’s going to take is when community members realize that their next-door neighbor or their family member is somebody who suffers with this disease. I think when people realize that people like myself, who have been to 30-plus treatments, now write nation-leading law, it can happen to anybody.”

Marshall says personal narratives can change people’s hearts and minds. “Some of the strongest voices are people with lived experience who can really humanize this issue and explain how the crisis has personally affected them,” he says, “and how things like harm reduction enabled them to live happy, healthy lives.”