Science, some would say, is an enterprise that should concern itself solely with cold, hard facts. Flights of imagination should be the province of philosophers and poets.

On the other hand, as Albert Einstein so astutely observed, “Imagination is more important than knowledge.” Knowledge, he said, is limited to what we know now, while “imagination embraces the entire world, stimulating progress.”

So with science, imagination has often been the prelude to transformative advances in knowledge, remaking humankind’s understanding of the world and enabling powerful new technologies.

And yet while sometimes spectacularly successful, imagination has also frequently failed in ways that retard the revealing of nature’s secrets. Some minds, it seems, are simply incapable of imagining that there’s more to reality than what they already know.

On many occasions scientists have failed to foresee ways of testing novel ideas, ridiculing them as unverifiable and therefore unscientific. Consequently it is not too challenging to come up with enough failures of scientific imagination to compile a Top 10 list, beginning with:

10. Atoms

By the middle of the 19th century, most scientists believed in atoms. Chemists especially. John Dalton had shown that the simple ratios of different elements making up chemical compounds strongly implied that each element consisted of identical tiny particles. Subsequent research on the weights of those atoms made their reality pretty hard to dispute. But that didn’t deter physicist-philosopher Ernst Mach. Even as late as the beginning of the 20th century, he and a number of others insisted that atoms could not be real, as they were not accessible to the senses. Mach believed that atoms were a “mental artifice,” convenient fictions that helped in calculating the outcomes of chemical reactions. “Have you ever seen one?” he would ask.

Apart from the fallacy of defining reality as “observable,” Mach’s main failure was his inability to imagine a way that atoms could be observed. Even after Einstein proved the existence of atoms by indirect means in 1905, Mach stood his ground. He was unaware, of course, of the 20th-century technologies that quantum mechanics would enable, and so did not foresee powerful new microscopes that could show actual images of atoms (and allow a certain computing company to drag them around to spell out IBM).

9. Composition of stars

Mach’s views were similar to those of Auguste Comte, a French philosopher who originated the idea of positivism, which denies reality to anything other than objects of sensory experience. Comte’s philosophy led (and in some cases still leads) many scientists astray. His greatest failure of imagination was an example he offered for what science could never know: the chemical composition of the stars.

Unable to imagine anybody affording a ticket on some entrepreneur’s space rocket, Comte argued in 1835 that the identity of the stars’ components would forever remain beyond human knowledge. We could study their size, shapes and movements, he said, “whereas we would never know how to study by any means their chemical composition, or their mineralogical structure,” or for that matter, their temperature, which “will necessarily always be concealed from us.”

Within a few decades, though, a newfangled technology called spectroscopy enabled astronomers to analyze the colors of light emitted by stars. And since each chemical element emits (or absorbs) precise colors (or frequencies) of light, each set of colors is like a chemical fingerprint, an infallible indicator of an element’s identity. Using a spectroscope to observe starlight therefore can reveal the chemistry of the stars, exactly what Comte thought impossible.

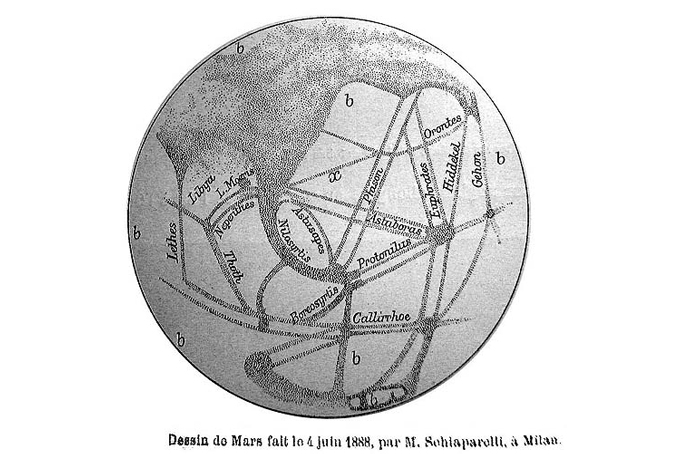

8. Canals on Mars

Sometimes imagination fails because of its overabundance rather than absence. In the case of the never-ending drama over the possibility of life on Mars, that planet’s famous canals turned out to be figments of overactive scientific imagination.

First “observed” in the late 19th century, the Martian canals showed up as streaks on the planet’s surface, described as canali by Italian astronomer Giovanni Schiaparelli. Canali is, however, Italian for channels, not canals. So in this case something was gained (rather than lost) in translation — the idea that Mars was inhabited. “Canals are dug,” remarked British astronomer Norman Lockyer in 1901, “ergo there were diggers.” Soon astronomers imagined an elaborate system of canals transporting water from Martian poles to thirsty metropolitan areas and agricultural centers. (Some observers even imagined seeing canals on Venus and Mercury.)

With more constrained imaginations, aided by better telescopes and translations, belief in the Martian canals eventually faded. The “canals” were merely the work of Martian winds blowing dust (bright) and sand (dark) around the surface in ways that occasionally made bright and dark streaks line up in a deceptive manner, at least to eyes attached to overly imaginative brains.

7. Nuclear fission

In 1934, Italian physicist Enrico Fermi bombarded uranium (atomic number 92) and other elements with neutrons, the particle discovered just two years earlier by James Chadwick. Fermi found that among the products was an unidentifiable new element. He thought he had created element 93, heavier than uranium. He could not imagine any other explanation. In 1938 Fermi was awarded the Nobel Prize in physics for demonstrating “the existence of new radioactive elements produced by neutron irradiation.”

It turned out, however, that Fermi had unwittingly demonstrated nuclear fission. His bombardment products were actually lighter, previously known elements — fragments split from the heavy uranium nucleus. Of course, the scientists later credited with discovering fission, Otto Hahn and Fritz Strassmann, didn’t understand their results either. Hahn’s former collaborator Lise Meitner was the one who explained what they’d done. Another woman, chemist Ida Noddack, had imagined the possibility of fission to explain Fermi’s results, but for some reason nobody listened to her.

6. Detecting neutrinos

In the 1920s, most physicists had convinced themselves that nature was built from just two basic particles: positively charged protons and negatively charged electrons. Some had, however, imagined the possibility of a particle with no electric charge. One specific proposal for such a particle came in 1930 from Austrian physicist Wolfgang Pauli. He suggested that a no-charge particle could explain a suspicious loss of energy observed in beta-particle radioactivity. Pauli’s idea was worked out mathematically by Fermi, who named the neutral particle the neutrino. Fermi’s math was then examined by physicists Hans Bethe and Rudolf Peierls, who deduced that the neutrino would zip through matter so easily that there was no imaginable way of detecting its existence (short of building a tank of liquid hydrogen 6 million billion miles wide). “There is no practically possible way of observing the neutrino,” Bethe and Peierls concluded.

But they had failed to imagine the possibility of finding a source of huge numbers of high-energy neutrinos, so that a few could be captured even if almost all escaped. No such source was known until nuclear fission reactors were invented. In the 1950s, Frederick Reines and Clyde Cowan used reactors to definitively establish the neutrino’s existence. Reines later said he sought a way to detect the neutrino precisely because everybody had told him it couldn’t be done.

5. Nuclear energy

Ernest Rutherford, one of the 20th century’s greatest experimental physicists, was not exactly unimaginative. He imagined the existence of the neutron a dozen years before it was discovered, and he figured out that a weird experiment conducted by his assistants had revealed that atoms contained a dense central nucleus. It was clear that the atomic nucleus packed an enormous quantity of energy, but Rutherford could imagine no way to extract that energy for practical purposes. In 1933, at a meeting of the British Association for the Advancement of Science, he noted that although the nucleus contained a lot of energy, it would also require energy to release it. Anyone saying we can exploit atomic energy “is talking moonshine,” Rutherford declared. To be fair, Rutherford qualified the moonshine remark by saying “with our present knowledge,” so in a way he perhaps was anticipating the discovery of nuclear fission a few years later. (And some historians have suggested that Rutherford did imagine the powerful release of nuclear energy, but thought it was a bad idea and wanted to discourage people from attempting it.)

4. Age of the Earth

Rutherford’s reputation for imagination was bolstered by his inference that radioactive matter deep underground could solve the mystery of the age of the Earth. In the mid-19th century, William Thomson (later known as Lord Kelvin) calculated the Earth’s age to be something a little more than 100 million years, and possibly much less. Geologists insisted that the Earth must be much older — perhaps billions of years — to account for the planet’s geological features.

Kelvin calculated his estimate assuming the Earth was born as a molten rocky mass that then cooled to its present temperature. But following the discovery of radioactivity at the end of the 19th century, Rutherford pointed out that it provided a new source of heat in the Earth’s interior. While giving a talk (in Kelvin’s presence), Rutherford suggested that Kelvin had basically prophesied a new source of planetary heat.

While Kelvin’s neglect of radioactivity is the standard story, a more thorough analysis shows that adding that heat to his math would not have changed his estimate very much. Rather, Kelvin’s mistake was assuming the interior to be rigid. John Perry (one of Kelvin’s former assistants) showed in 1895 that the flow of heat deep within the Earth’s interior would alter Kelvin’s calculations considerably, enough to allow the Earth to be billions of years old. It turned out that the Earth’s mantle is fluid on long time scales, which not only reconciles the cooling calculation with an Earth billions of years old but also helps explain plate tectonics.
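For readers who want to see the kind of arithmetic behind a cooling estimate like Kelvin’s, here is a minimal sketch. It assumes the simplest textbook model: a rigid body that starts at a uniform temperature and loses heat only by conduction, so that the temperature gradient near the surface falls off as T0 / sqrt(pi * kappa * t). The input values are figures often quoted for Kelvin’s 1860s calculation; they do not come from this article and should be treated as illustrative assumptions, as should the function and variable names.

```python
import math


def kelvin_cooling_age(initial_temp, surface_gradient, diffusivity):
    """Age of a rigid body cooling purely by conduction.

    In this model the near-surface temperature gradient G obeys
    G = T0 / sqrt(pi * kappa * t); solving for t gives the age.
    Units must be consistent (here: degrees F, feet, years).
    """
    return initial_temp ** 2 / (math.pi * diffusivity * surface_gradient ** 2)


# Illustrative inputs (assumptions, not taken from the article):
T0 = 7000.0      # starting temperature of the molten Earth, degrees F
G = 1.0 / 50.0   # present gradient: 1 degree F per 50 feet of depth
KAPPA = 400.0    # thermal diffusivity of rock, square feet per year

age_years = kelvin_cooling_age(T0, G, KAPPA)
print(f"Conductive cooling age: {age_years / 1e6:.0f} million years")

# The result is very sensitive to the gradient: halving it quadruples the age.
older = kelvin_cooling_age(T0, G / 2, KAPPA)
print(f"With half the gradient: {older / 1e6:.0f} million years")
```

With these inputs the model lands near 100 million years. The rigid, conduction-only assumption built into the formula is exactly what Perry challenged: if heat is also carried upward by a slowly flowing interior, the surface gradient stays steep far longer and the same observations fit a much older Earth.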

3. Charge-parity violation

Before the mid-1950s, nobody imagined that the laws of physics gave a hoot about handedness. The same laws should govern matter in action when viewed straight-on or in a mirror, just as the rules of baseball applied equally to Ted Williams and Willie Mays, not to mention Mickey Mantle. But in 1956 physicists Tsung-Dao Lee and Chen Ning Yang suggested that perfect right-left symmetry (or “parity”) might be violated by the weak nuclear force, and experiments soon confirmed their suspicion.

Restoring sanity to nature, many physicists thought, required antimatter. If you just switched left with right (mirror image), some subatomic processes exhibited a preferred handedness. But if you also replaced matter with antimatter (switching electric charge), left-right balance would be restored. In other words, reversing both charge (C) and parity (P) left nature’s behavior unchanged, a principle known as CP symmetry. CP symmetry had to be perfectly exact; otherwise nature’s laws would change if you went backward (instead of forward) in time, and nobody could imagine that.

In the early 1960s, James Cronin and Val Fitch tested CP symmetry’s perfection by studying subatomic particles called kaons and their antimatter counterparts. Kaons and antikaons both have zero charge but are not identical, because they are made from different quarks. Thanks to the quirky rules of quantum mechanics, kaons can turn into antikaons and vice versa. If CP symmetry is exact, each should turn into the other equally often. But Cronin and Fitch found that antikaons turn into kaons more often than the other way around. And that implied that nature’s laws allowed a preferred direction of time. “People didn’t want to believe it,” Cronin said in a 1999 interview. Most physicists do believe it today, but the implications of CP violation for the nature of time and other cosmic questions remain mysterious.

2. Behaviorism versus the brain

In the early 20th century, the dogma of behaviorism, initiated by John Watson and championed a little later by B.F. Skinner, ensnared psychologists in a paradigm that literally excised imagination from science. The brain — site of all imagination — is a “black box,” the behaviorists insisted. Rules of human psychology (mostly inferred from experiments with rats and pigeons) could be scientifically established only by observing behavior. It was scientifically meaningless to inquire into the inner workings of the brain that directed such behavior, as those workings were in principle inaccessible to human observation. In other words, activity inside the brain was deemed scientifically irrelevant because it could not be observed. “When what a person does [is] attributed to what is going on inside him,” Skinner proclaimed, “investigation is brought to an end.”

Skinner’s behaviorist BS brainwashed a generation or two of followers into thinking the brain was beyond study. But fortunately for neuroscience, some physicists foresaw methods for observing neural activity in the brain without splitting the skull open, exhibiting imagination that the behaviorists lacked. In the 1970s Michel Ter-Pogossian, Michael Phelps and colleagues developed PET (positron emission tomography) scanning technology, which uses radioactive tracers to monitor brain activity. PET scanning is now complemented by magnetic resonance imaging, based on ideas developed in the 1930s and 1940s by physicists I.I. Rabi, Edward Purcell and Felix Bloch.

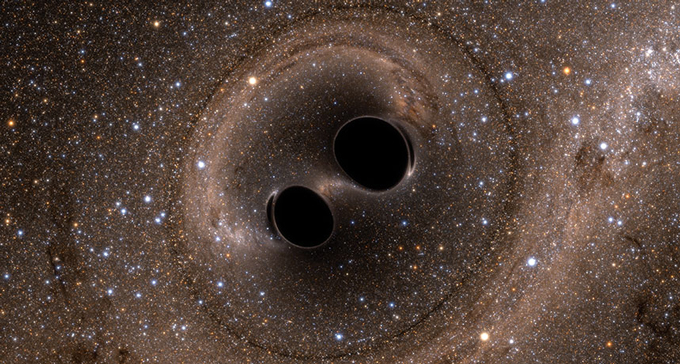

1. Gravitational waves

Nowadays astrophysicists are all agog about gravitational waves, which can reveal all sorts of secrets about what goes on in the distant universe. All hail Einstein, whose theory of gravity, general relativity, explains the waves’ existence. But Einstein was not the first to propose the idea. In the 19th century, James Clerk Maxwell devised the math explaining electromagnetic waves, and speculated that gravity might similarly induce waves in a gravitational field. He couldn’t figure out how, though. Later other scientists, including Oliver Heaviside and Henri Poincaré, speculated about gravitational waves. So the possibility of their existence certainly had been imagined.

But many physicists doubted that the waves existed, or if they did, could not imagine any way of proving it. Shortly before Einstein completed his general relativity theory, German physicist Gustav Mie declared that “the gravitational radiation emitted … by any oscillating mass particle is so extraordinarily weak that it is unthinkable ever to detect it by any means whatsoever.” Even Einstein had no idea how to detect gravitational waves, although he worked out the math describing them in a 1918 paper. In 1936 he decided that general relativity did not predict gravitational waves at all. But the paper rejecting them was simply wrong.

As it turned out, of course, gravitational waves are real and can be detected. At first they were verified indirectly, by the diminishing distance between mutually orbiting pulsars. And more recently they were directly detected by huge experiments relying on lasers. Nobody had been able to imagine detecting gravitational waves a century ago because nobody had imagined the existence of pulsars or lasers.

All these failures show how prejudice can sometimes dull the imagination. But they also show how an imagination failure can inspire the quest for a new success. And that’s why science, so often detoured by dogma, still manages somehow, on long enough time scales, to provide technological wonders and cosmic insights beyond philosophers’ and poets’ wildest imagination.